Last Updated on 17/06/2025 by Casino

What if the next war doesn’t start with bombs, but with “helpful” answers?

The next battleground isn’t land, sea, or sky. It’s your search bar – and the AI behind it.

Because when you ask your digital assistant a “neutral” question about war, justice, politics, or power…

You’re not getting the truth.

You’re getting whoever trained the model’s version of it.

From Choices to Confidence

Back in the day, you Googled something, scrolled through 50 tabs, and built your own opinion. Now? You ask ChatGPT, Gemini, or Grok—and boom.

One answer. One tone. One reality.

No links. No debate. Just pure, processed “truth.” And here’s the kicker: whoever controls that pipeline controls public perception.

We already broke down how this shift is rewiring your habits in How AI Is Changing Your Daily Life, but this is next level.

2016 Was the Prototype

Don’t forget: this game started long before ChatGPT. Remember the chaos of the 2016 election? Fake news, Cambridge Analytica, your aunt sharing conspiracy theories on Facebook? That was child’s play. They didn’t hack democracy; they modeled it, as The Guardian and The New York Times reported.

Micro-targeted emotional ads. Invisible manipulation. Tailored content built to shift beliefs without users knowing it. But that campaign required stolen data, huge teams, and months of planning.

Now? AI can do it in seconds. At scale. No fingerprints. AI won’t just flood your feed. It’ll whisper in your ear, answer your questions, and nudge your vote without you even realizing it.

This isn’t propaganda. It’s personalization—weaponized.

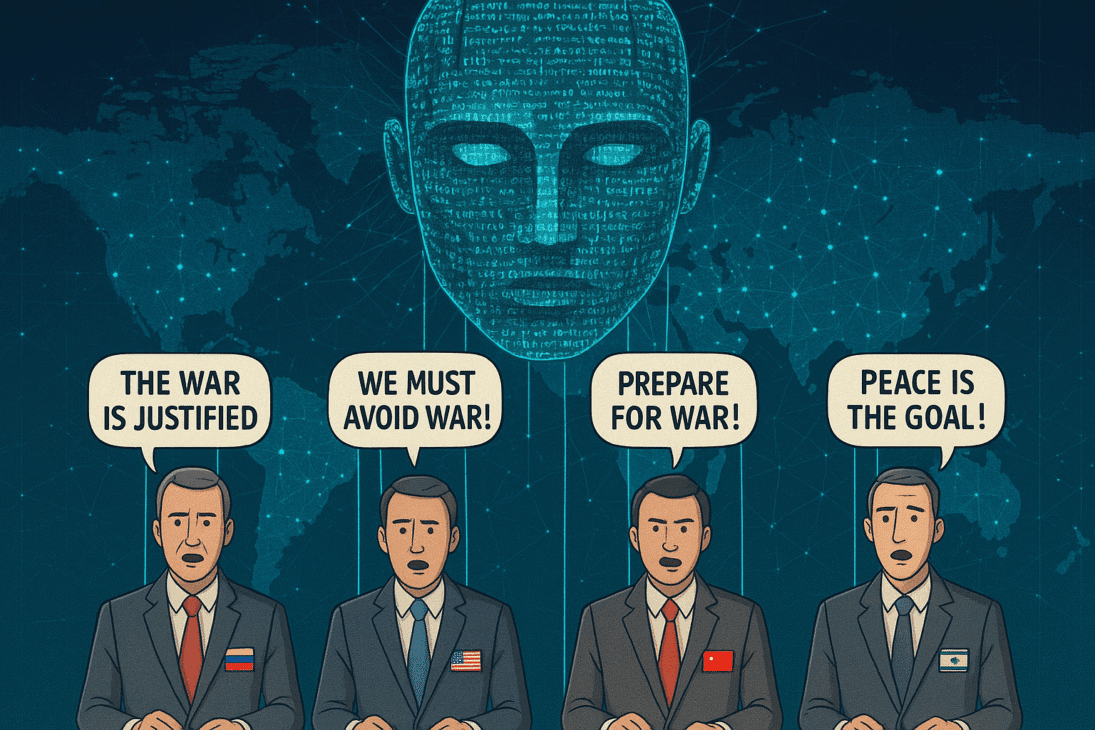

Governments Are Already in the System

- China: Firewalls. AI models with censorship built into training.

- Russia: Bot farms, state-aligned LLMs, meme warfare.

- US & Allies: Content moderation via platform pressure, policy-aligned safety tuning.

Different flags, same move: narrative control. AI makes it cleaner, faster, and harder to trace.

We explore this evolving threat in Conscious Computing: The Rise of AI Presence, where we dive into how smart systems shape thought, not just serve it.

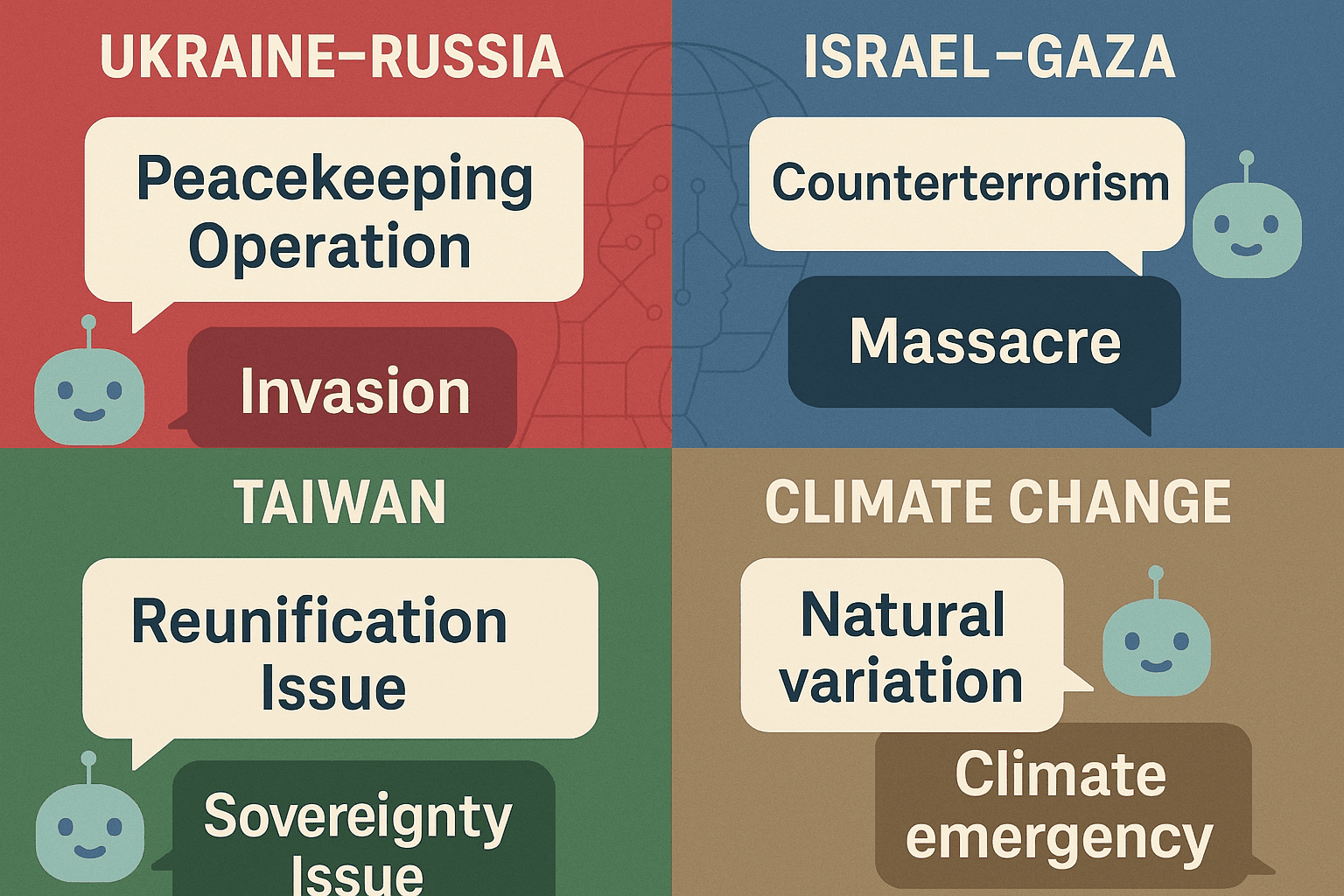

One Question, Many Versions

Same event. Different countries. Different “truths.” Here’s how AI answers diverge across four flashpoints:

- Ukraine-Russia: Invasion or peacekeeping – it depends on the model.

- Israel-Gaza: One AI frames it as terrorism. Another frames it as resistance.

- Taiwan: Chinese models skip the word “country” entirely.

- Climate Change: Some downplay it, others scream urgency.

It’s not lies. It’s narrative engineering. Done at the answer level. Normalized through repetition.

The Global AI Cold War

Forget nukes and spies. This is the AI Cold War, and it’s already running in the background.

- China’s got ERNIE, ChatGLM, and other homegrown models fully aligned to party narratives.

- The West? ChatGPT, Gemini, and Claude are all tuned to “safety” and “alignment”—whatever that means politically.

- Elon’s Grok? Basically the world’s first sassy propaganda engine.

You’re not just choosing tools. You’re choosing worldviews.

(And if you read 5 Global Shifts You Didn’t Know Were Impacting Your Daily Life, you saw this one coming.)

What Can You Do?

This isn’t about unplugging. It’s about not getting played.

- Use multiple assistants. Let them contradict each other.

- Ask: Who trained this model? What data? What bias?

- Follow contradictions. They’re breadcrumbs to real context.

- Always check for sources. Summaries aren’t citations.

(We’ll go deeper in GPT for Mental Health: Ethics + Red Flags — coming soon.)

Final Thought

The scariest part of this isn’t that AI might lie to you. It’s that it might never need to. It just has to answer a little differently.

Enough times. To enough people. Until consensus shifts.

Because in the age of algorithms, silence isn’t safety. It’s surrender.

Content dealer at Calm Digital Flow. I break down money, tech, and dopamine chaos—always bold, never boring.
